FabImage® Studio Professional

Software for Machine Vision Engineers

Key advantages

- No low-level programming knowledge required.

- Data-flow based software.

- Fast and optimized algorithms.

- 1000+ high performance functions.

- Custom machine vision filters.

FabImage® Studio Professional is data-flow based software designed for machine vision engineers. It requires no programming skills, yet it is powerful enough to compete with solutions built on low-level programming libraries.

Also, the architecture is highly flexible, ensuring that users can easily adapt the product to the way they work and to specific requirements of any project.

Real-world application examples and case histories

Introduction

Deep Learning Add-on is a breakthrough technology for machine vision. It is a set of five ready-made tools which are trained with 20-50 sample images, and which then detect objects, defects or features automatically. Internally it uses large neural networks designed and optimized for use in industrial vision systems.

Together with FabImage® Studio Professional, you get a complete solution for training and deploying modern machine vision applications.

Key Facts

Training Data

Learns from few samples

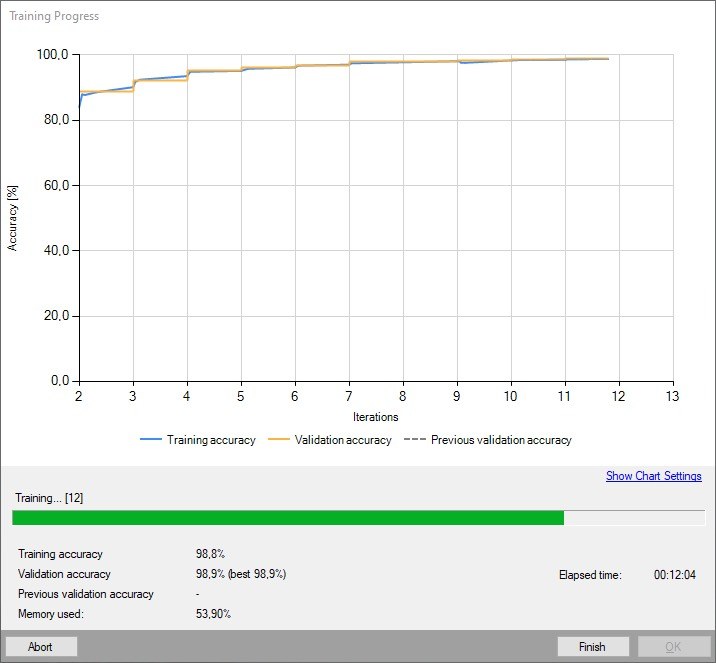

Typical applications require between 20 and 50 images for training. The more the better, but our software internally learns key characteristics from a limited training set and then generates thousands of new artificial samples for effective training.
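The idea of generating artificial samples from a small training set can be illustrated with a minimal Python sketch. The source does not say which transformations the software applies internally, so the horizontal flip and brightness shift below, along with the function names `flip_horizontal`, `shift_brightness` and `augment`, are assumptions chosen purely for illustration.

```python
import random

def flip_horizontal(image):
    """Mirror each row of a 2-D grayscale image (list of lists)."""
    return [row[::-1] for row in image]

def shift_brightness(image, delta):
    """Add delta to every pixel, clamped to the 0-255 range."""
    return [[max(0, min(255, p + delta)) for p in row] for row in image]

def augment(images, count, seed=0):
    """Return `count` artificial samples derived from the original images.

    Assumption: random flips and small brightness shifts stand in for
    whatever transformations the real software uses.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(count):
        sample = rng.choice(images)
        if rng.random() < 0.5:
            sample = flip_horizontal(sample)
        sample = shift_brightness(sample, rng.randint(-20, 20))
        samples.append(sample)
    return samples

# Two tiny 2x2 "images" expanded into 1000 artificial samples.
originals = [[[10, 200], [30, 40]], [[0, 255], [128, 64]]]
artificial = augment(originals, count=1000)
```

Only variations that can really occur on the production line should be generated. If object orientation is fixed, for example, flipped samples would teach the model something it never needs to handle.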

Hardware Requirements

Works on GPU and CPU

A modern GPU is required for effective training. In production, you can use either a GPU or a CPU. A GPU will typically be 3-10 times faster (with the exception of Object Classification, which is equally fast on a CPU).

Speed

The highest performance

Typical training time on a GPU is 5-15 minutes. Inference time varies depending on the tool and hardware between 5 and 100 ms per image. The highest performance is guaranteed by an internally developed industrial inference engine.
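To relate the quoted inference times to line speed, the arithmetic can be sketched as follows. This is a rough estimate only: it assumes images are processed one at a time and ignores acquisition, transfer and result handling, and the helper names are hypothetical.

```python
def images_per_second(inference_ms):
    """Upper bound on throughput for one image at a time, ignoring acquisition and I/O."""
    return 1000.0 / inference_ms

def fits_cycle_time(inference_ms, cycle_ms):
    """True if inference alone finishes within the line's cycle time."""
    return inference_ms <= cycle_ms

fastest = images_per_second(5)    # 200.0 images per second
slowest = images_per_second(100)  # 10.0 images per second
```

For example, a line that presents a new part every 50 ms leaves room for a 5 ms inference but not for a 100 ms one.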

Training Procedure

1. Collect and normalize images

- Acquire between 20 and 50 images (the more the better), both Good and Bad, representing all possible object variations; save them to disk.

- Make sure that the object scale, orientation and lighting are as consistent as possible.

2. Training

- Open FabImage Studio Professional and add one of the Deep Learning Add-on tools.

- Open an editor associated with the tool and load your training images there.

- Label your images or add markings using drawing tools.

- Click “Train”.

Training and Validation Sets

In deep learning, as in all fields of machine learning, it is very important to follow correct methodology. The most important rule is to separate the Training set from the Validation set. The Training set is a set of samples used for creating a model. We cannot use it to measure the model’s performance, as this often generates results that are overoptimistic. Thus, we use separate data – the Validation set – to evaluate the model. Our Deep Learning Add-on automatically creates both sets from the samples provided by the user.
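The separation of the two sets can be sketched in a few lines of Python. The add-on performs this split automatically, and the source does not state the ratio it uses; the 20% validation fraction and the function name `split_samples` below are assumptions for illustration only.

```python
import random

def split_samples(samples, validation_fraction=0.2, seed=0):
    """Shuffle the samples and split them into disjoint training and validation sets.

    Assumption: a fixed 80/20 split; the real tool may choose differently.
    """
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]

# 30 hypothetical image files split into 24 training and 6 validation samples.
image_files = [f"sample_{i:02d}.png" for i in range(30)]
training_set, validation_set = split_samples(image_files)
```

Because no sample appears in both sets, the score measured on the validation set reflects how the model behaves on images it has not seen during training.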

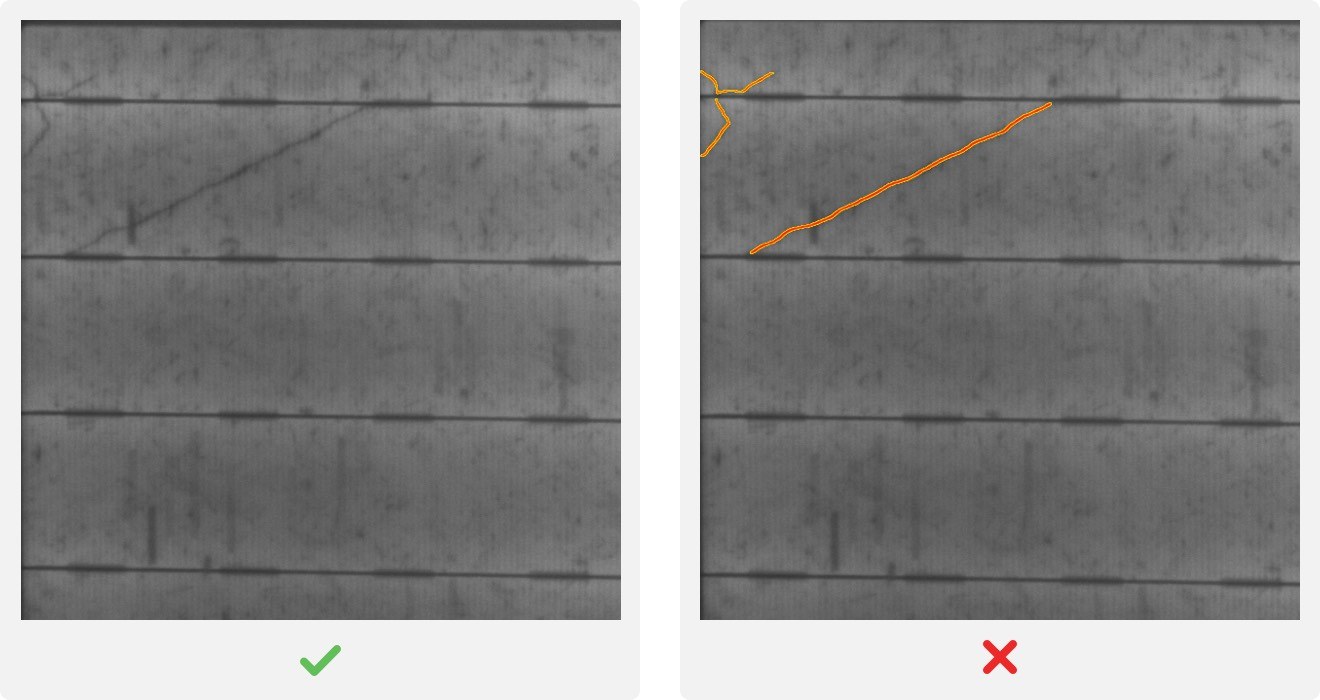

Feature detection

In the supervised mode the user needs to carefully label pixels corresponding to defects on the training images. The tool then learns to distinguish good and bad features by looking for their key characteristics.

Photovoltaics Inspection

In this application cracks and scratches must be detected on a surface that includes complicated features. With traditional methods, this requires complicated algorithms with dozens of parameters which must be adjusted for each type of solar panel. With Deep Learning, it is enough to train the system in the supervised mode, using just one tool.

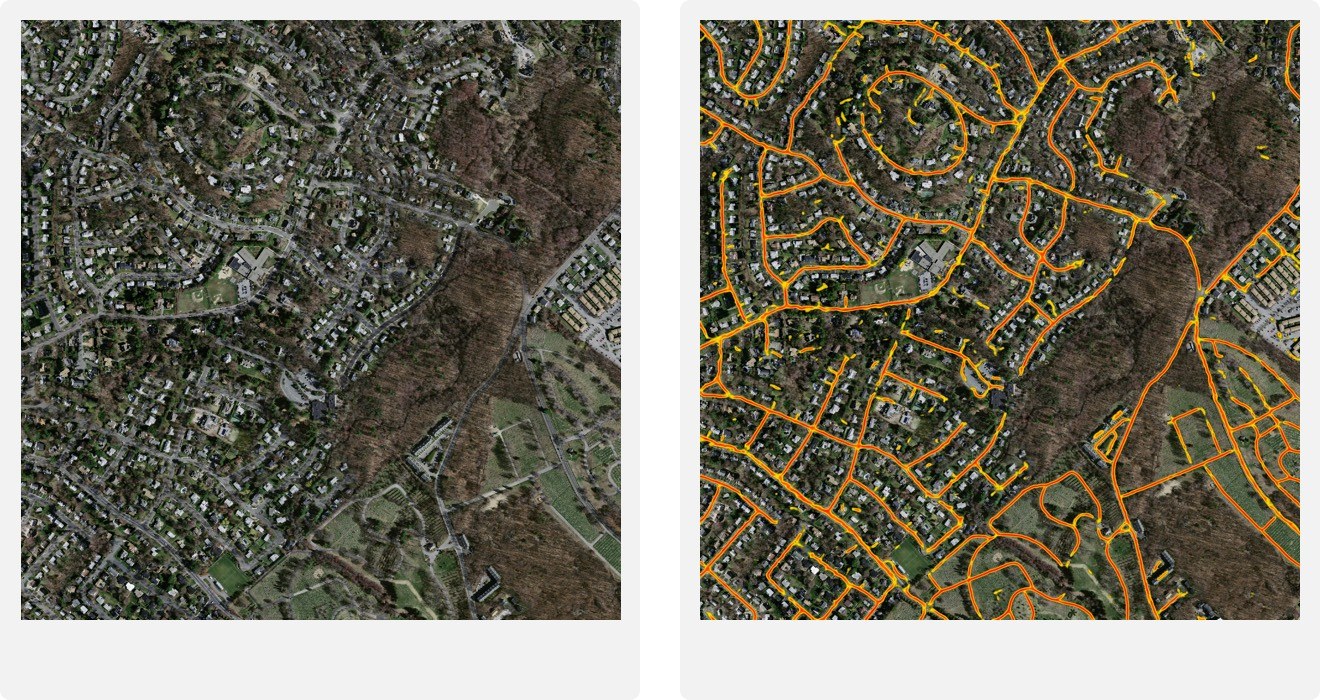

Satellite Image Segmentation

Satellite images are difficult to analyse as they include a huge variety of features. Nevertheless, our Deep Learning Add-on can be trained to detect roads and buildings with very high reliability. Training may be performed using only one properly labeled image, and the results can be verified immediately. Add more samples to increase the robustness of the model.

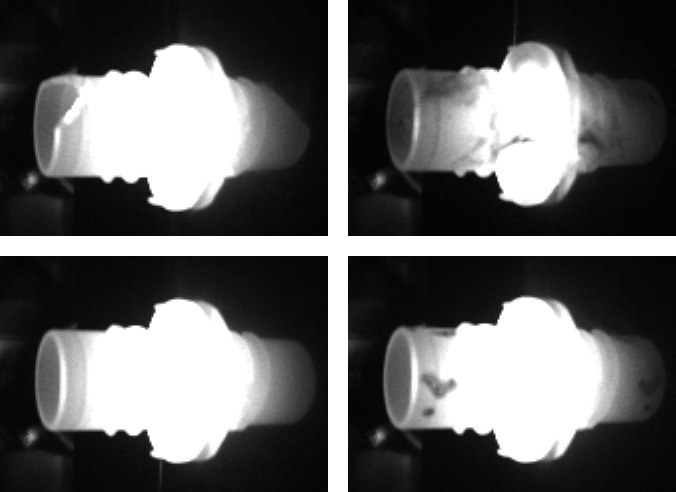

Anomaly Detection

In the unsupervised mode training is simpler. There is no direct definition of a defect – the tool is trained with Good samples and then looks for deviations of any kind.
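The principle of learning only from Good samples can be shown with a deliberately simple statistical stand-in. This is not the neural network the add-on uses: the sketch below just learns a mean and spread per feature and scores how far a new sample deviates. All names and the threshold of 3 are assumptions.

```python
from statistics import mean, pstdev

def fit(good_samples):
    """Learn the per-feature mean and standard deviation from Good samples only."""
    columns = list(zip(*good_samples))
    return [(mean(col), pstdev(col)) for col in columns]

def anomaly_score(model, sample):
    """Largest deviation from the learned mean, in units of standard deviation."""
    return max(
        abs(value - m) / (s if s > 0 else 1.0)
        for value, (m, s) in zip(sample, model)
    )

# Each sample is a short feature vector (e.g. mean gray levels of three regions).
good = [[10, 50, 90], [12, 48, 91], [11, 52, 89], [9, 50, 90]]
model = fit(good)

THRESHOLD = 3.0  # assumed cut-off between acceptable variation and anomaly
is_ok = anomaly_score(model, [11, 49, 90]) < THRESHOLD       # within normal variation
is_defect = anomaly_score(model, [10, 50, 60]) >= THRESHOLD  # third feature deviates strongly
```

No defect is ever described to the model. Anything that falls outside the variation seen in the Good samples is reported, whatever its cause.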

Package Verification

When a sushi box is delivered to a market, each of the elements must be correctly placed at a specific position. Defects are difficult to define when slight variations are also considered acceptable. The solution is to use the unsupervised deep learning mode, which detects any significant variation from what the tool has seen and learned in the training phase.

Plastics, injection moulding

Injection moulding is a complex process with many possible production problems. Plastic objects may also include some bending or other shape deviations that are acceptable for the customer. Our Deep Learning Add-on can learn all acceptable deviations from the provided samples and then detect anomalies of any type when running on the production line.

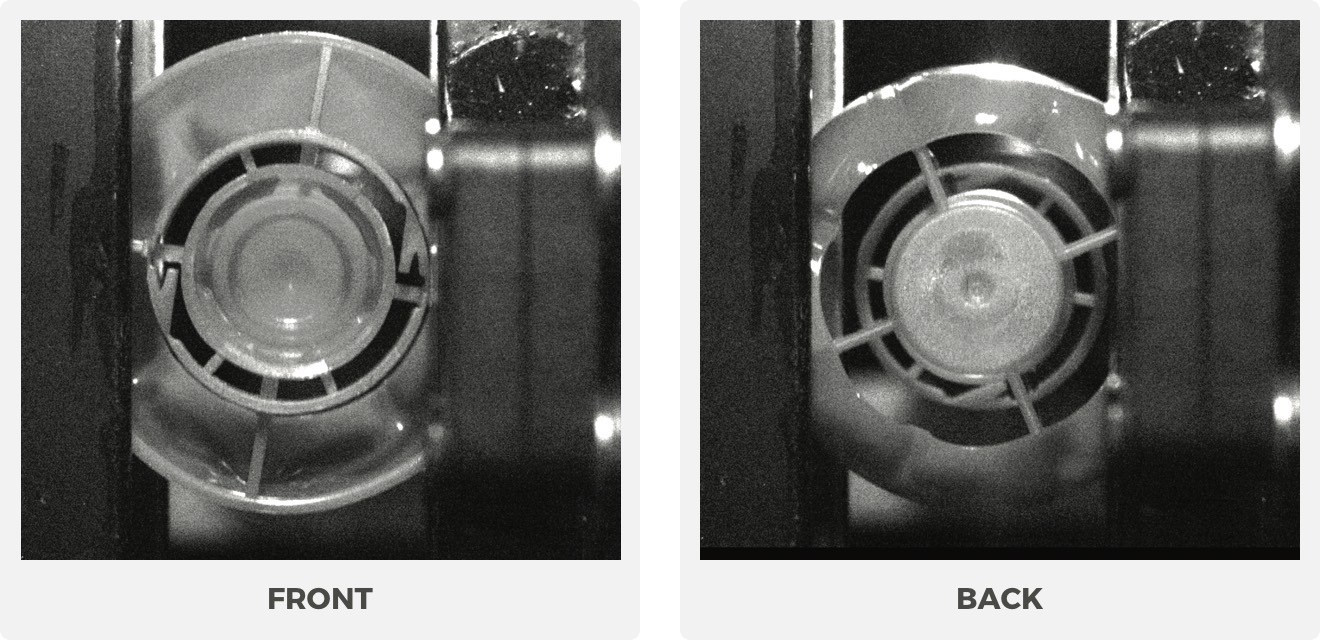

Object Classification

The Object Classification tool divides input images into groups created by the user according to their particular features. As a result the name of a class and the classification confidence are given.
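How a class name and a confidence can be derived from raw network outputs is sketched below. The source does not describe how the tool computes its confidence; a softmax over per-class scores is a common choice and is assumed here. The function name `classify` and the example scores are hypothetical.

```python
import math

def classify(scores):
    """Return (class name, confidence) from a dict of raw per-class scores.

    Assumption: confidence is the softmax probability of the winning class.
    """
    top = max(scores.values())
    # Subtract the maximum before exponentiating for numerical stability.
    exps = {name: math.exp(s - top) for name, s in scores.items()}
    total = sum(exps.values())
    probabilities = {name: e / total for name, e in exps.items()}
    best = max(probabilities, key=probabilities.get)
    return best, probabilities[best]

name, confidence = classify({"Front": 2.0, "Back": 0.0})
```

In an application, a low confidence can be treated as a third outcome, for example by routing the part to manual inspection instead of accepting the predicted class.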

Caps: Front or Back

Plastic caps may sometimes accidentally flip in the production machine. The customer wants to detect this situation. The task can be completed with traditional methods, but it requires an expert to design a specific algorithm for this application. Alternatively, we can use deep learning based classification, which automatically learns to recognize Front and Back from a set of training pictures.

3D Alloy Wheel Identification

There may be hundreds of different alloy wheel types being manufactured at a single plant. Identifying a particular model among so many is virtually impossible with traditional methods. Template Matching would need a huge amount of time to match hundreds of models, while handcrafting bespoke models would simply require too much development and maintenance. Deep learning is an ideal solution because it learns directly from sample pictures without any bespoke development.

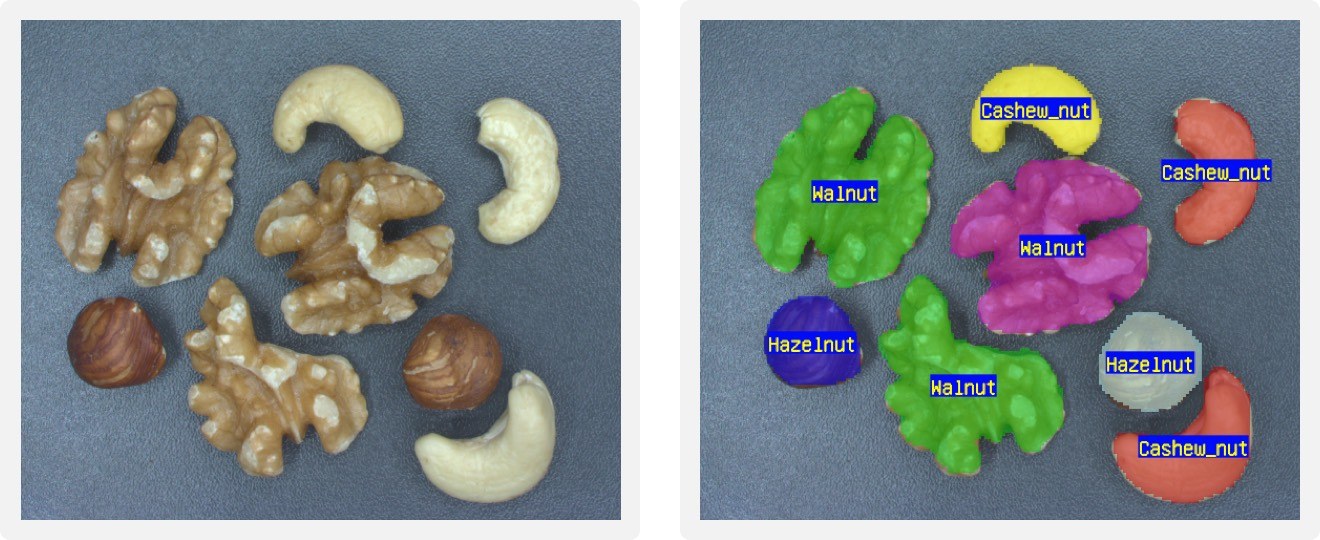

Instance Segmentation

The instance segmentation technique is used to locate, segment and classify single or multiple objects within an image. Unlike the feature detection technique, this technique detects individual objects and may be able to separate them even if they touch or overlap.

Nuts Segmentation

Mixed nuts are a very popular snack food consisting of various types of nuts. As the percentage composition of nuts in a package must match the list of ingredients printed on the package, customers want to be sure that the proper amount of each type of nut is packaged. The Instance Segmentation tool is an ideal solution for such an application, since it returns masks corresponding to the segmented objects.
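Once each nut has been segmented and classified, checking the composition is simple bookkeeping. The sketch below assumes the tool's result has already been reduced to one class label per detected instance. The labels, the declared recipe and the 10-point tolerance are made-up values, and shares are computed by count rather than by weight or mask area.

```python
from collections import Counter

def composition(instance_labels):
    """Percentage share of each class among the detected instances (by count)."""
    counts = Counter(instance_labels)
    total = sum(counts.values())
    return {label: 100.0 * n / total for label, n in counts.items()}

def within_tolerance(measured, declared, tolerance=10.0):
    """True if every declared share is matched within `tolerance` percentage points."""
    return all(
        abs(measured.get(label, 0.0) - share) <= tolerance
        for label, share in declared.items()
    )

detected = ["almond", "cashew", "almond", "hazelnut",
            "almond", "cashew", "almond", "hazelnut"]
shares = composition(detected)
ok = within_tolerance(shares, {"almond": 50.0, "cashew": 30.0, "hazelnut": 20.0})
```

If the ingredient list is specified by weight, the mask area of each instance could be summed per class instead of counting instances.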

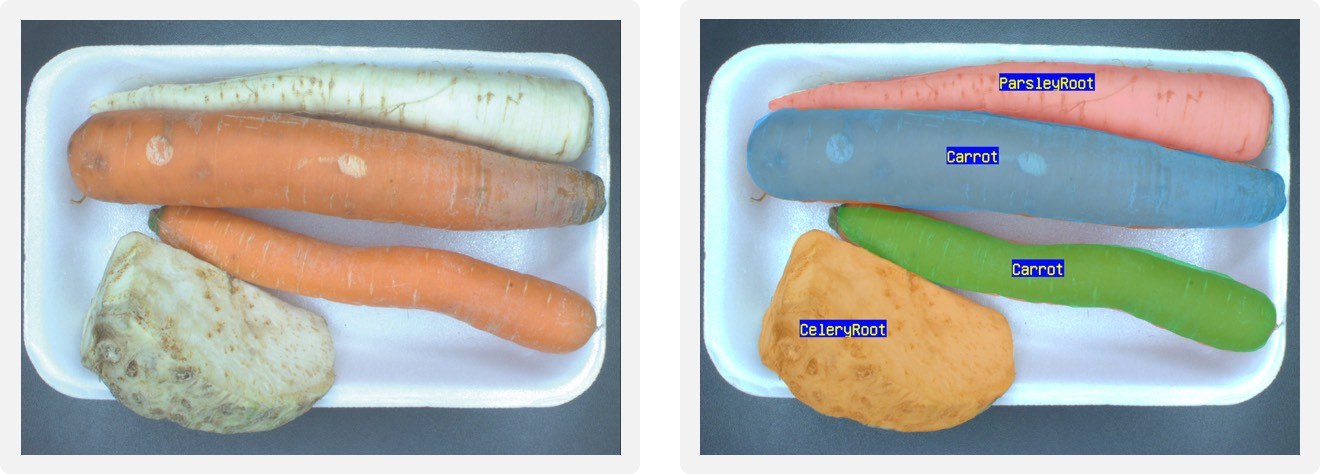

Package Verification

A typical set of soup greens used in Europe is packaged on a white plastic tray in a random position. Production line workers may sometimes accidentally forget to put one of the vegetables on the tray. Although there is a system that weighs the trays, the customer wants to verify completeness of the product just before the sealing process. As there are no two vegetables that look the same, the solution is to use deep learning-based segmentation. In the training phase, the customer just has to mark regions corresponding to vegetables.

Point Location

The Point Location tool looks for specific shapes, features or marks that can be identified as points in an input image. It may be compared to traditional template matching, but here the tool is trained with multiple samples and becomes robust against huge variability of the objects of interest.

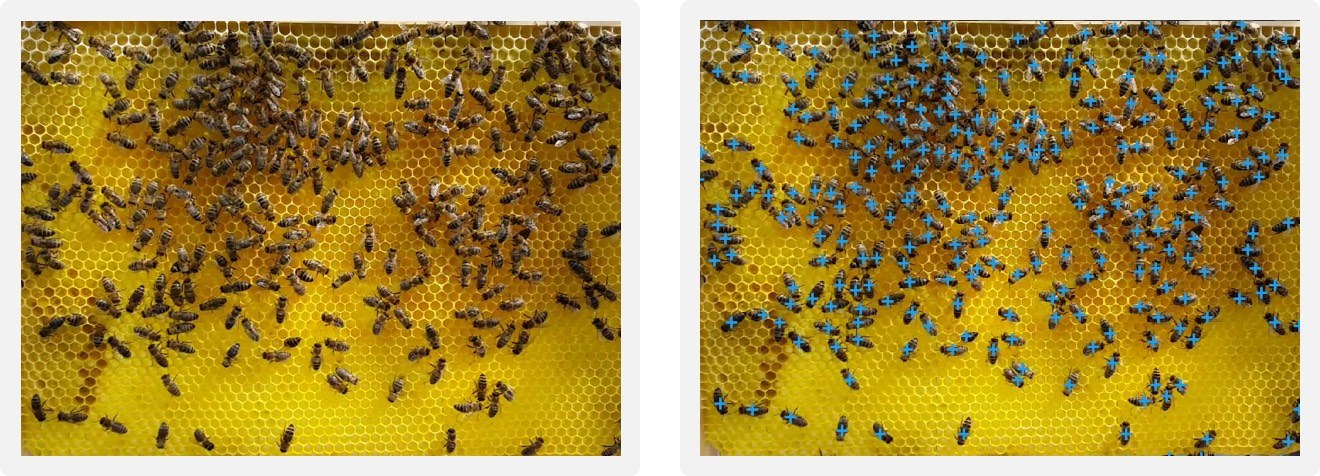

Bees Tracing

A task that seems impossible with traditional image processing methods can be solved with our latest tool. In this case we use it to detect bees. We can then determine whether they are infected with varroosis, a disease caused by parasitic mites that infest honey bees. The parasite attaches to the bees' bodies, and the characteristic red inflammation lets us classify the bees according to their health condition. This example shows not only an easy solution to a complex task, but also that we are open to many different branches of industry, e.g. agriculture.

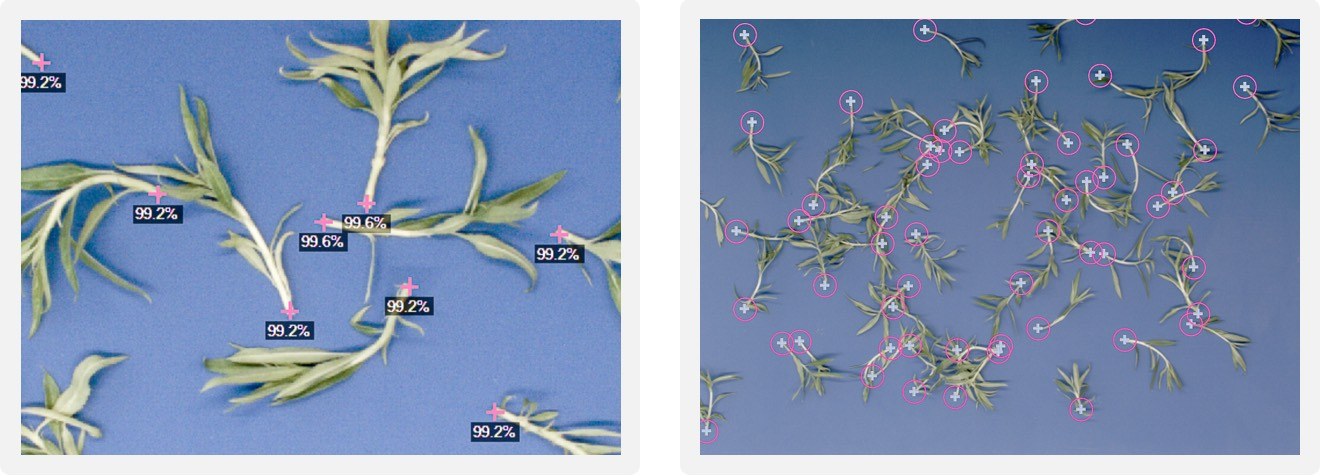

Pick and Place

In these applications we need to guide a robotic arm to pick up items, most typically from a conveyor belt or from a container. A good example of such an application is picking small stem cuttings and then placing them vertically in pots. Any inaccuracy in detection may result in planting them too deep or upside down, which prevents the cuttings from forming roots. Our deep learning tools make it possible to quickly locate the desired parts of the plants and provide the accurate results required for this operation.

Three types of Licenses are available:

Developer Licenses: Licenses required to develop a vision program

Runtime Licenses: Licenses required to run a vision program. To purchase a Runtime License, you must have purchased a Developer License.

Add-on Licenses: Additional Licenses that allow you to expand the functionality of the other two types of Licenses.

Developer Licenses

Basic License

The Developer License is assigned to a single developer user and can be activated only via USB dongle.

Free Technical Support services are included for the first 12 months after activation, such as:

- Most up-to-date version of the software with associated new features and documentation*

- Answers, via email, to technical questions related to the use of the software

\* To obtain the most up-to-date version of the software, you must send Opto Engineering the WIBU file associated with the USB dongle of the License you wish to have the upgrade on. Learn more about how and where to download the WIBU file at https://docs.fab-image.com/stu...

| P/N | Description | Category | Features |
| --- | --- | --- | --- |
| FIS-PRO | FabImage® Studio | Developer Basic License | Development environment (IDE) for programming in graphical form. |
| USB-DONGLE-FI | USB Dongle | Hardware | Required to activate the License via hardware USB dongle. |

ADD-ON Licenses

ADD-ON Licenses** are additional Licenses that allow you to expand the functionality of the Basic License. To purchase ADD-ON Licenses, you must have previously purchased a Developer FabImage® Studio (FIS-PRO) License.

\*\* To order an ADD-ON License, you must also send the WIBU file associated with the USB dongle of the developer for which you wish to activate the add-on. Read more about how and where to download the WIBU file at this link

\*\*\* It is not possible to build multiple macrofilters with Deep Learning that work in parallel.

| P/N | Description | Category | Features |
| --- | --- | --- | --- |
| FIS-CODE-ADD | Code Generator for those who have purchased FabImage® Studio and also want to use FabImage® Library Suite libraries | Developer ADD-ON License | Enables the user to transform applications made in FabImage® Studio Professional (FIS-PRO) into C++ code. The package of FabImage® Studio + Code Generator ADD-ON (FIS-PRO + FIS-CODE-ADD) allows users to integrate and take advantage of the FabImage® Library Suite (FIL-SUI). The user can employ the development environment for either graphical programming or direct programming in C++ or .NET. To run the application it is sufficient to purchase a Runtime license (FIS-RUN or FIL-RUN). |
| FIS-PAR-ADD | Parallel Processing ADD-ON for those who have purchased FabImage® Studio (FIS-PRO) | Developer ADD-ON License | Allows the user to: |
| FI-DL-ADD | FabImage® Deep Learning ADD-ON for those who have already purchased FabImage® Studio (FIS-PRO) | Developer ADD-ON License | Allows the user to use Deep Learning Tools \*\*\*. |

Runtime Licenses

Runtime License SINGLE THREAD

The Runtime License is assigned to a single vision system and allows multi-camera acquisition and execution of processes (macrofilters) sequentially (single thread).

It can be activated via two options:

- USB Dongle (USB-DONGLE-RUN)

- Computer ID*

To purchase a Runtime License for a Single Thread, you must have purchased the FabImage® Studio Developer License (FIS-PRO).

\* If the License is lost through damage to the computer to which it is assigned by Computer ID, it cannot be recovered and a new one must be purchased. Opto Engineering recommends purchasing the License via USB dongle.

| P/N | Description | Category | Features |
| --- | --- | --- | --- |
| FIS-RUN | FabImage® Studio Runtime | Runtime License | Allows you to run an unlimited number of processes (macrofilters) sequentially. A particular advantage offered by this License is that it makes it easy to introduce changes, such as modifying and reloading recipes, even directly on the production line. |
| USB-DONGLE-RUN | USB Dongle (Optional) | Hardware | The License is activated via USB dongle. |

Runtime License MULTITHREADING

In order to run the Parallel Processing (FIS-PAR-ADD) features, you must purchase one of the following Runtime Licenses (these Runtimes replace the FabImage® Studio Single Thread Runtime (FIS-RUN)). To run an unlimited number of processes in parallel, it is recommended to purchase the Runtime License corresponding to the number of cores on the machine vision computer.

To purchase a Multithreading Runtime License, you must have purchased a Developer FabImage® Studio (FIS-PRO) and a Developer Parallel Processing ADD-ON License (FIS-PAR-ADD).

\* To run an unlimited number of processes in parallel, we suggest purchasing the Runtime License that is limited by the number of PC cores (FIS-RUN-CL-XX).

| P/N | Description | Category | Features |
| --- | --- | --- | --- |
| FIS-RUN-CL-XX | | | |
| FIS-RUN-CL-4 | FabImage® Studio Runtime for a 4-core machine vision computer | ADD-ON Runtime License for Parallel Processing | Allows running an unlimited number of processes in parallel. Requires a PC with 4 cores. |
| FIS-RUN-CL-6 | FabImage® Studio Runtime for a 6-core machine vision computer | ADD-ON Runtime License for Parallel Processing | Allows running an unlimited number of processes in parallel. Requires a PC with 6 cores. |
| FIS-RUN-CL-8 | FabImage® Studio Runtime for an 8-core machine vision computer | ADD-ON Runtime License for Parallel Processing | Allows running an unlimited number of processes in parallel. Requires a PC with 8 cores. |
| FIS-RUN-CL-16 | FabImage® Studio Runtime for a 16-core machine vision computer | ADD-ON Runtime License for Parallel Processing | Allows running an unlimited number of processes in parallel. Requires a PC with 16 cores. |
| FIS-RUN-TL-XX* | | | |
| FIS-RUN-TL-2 | FabImage® Studio Runtime limited to 2 Threads | ADD-ON Runtime License for Parallel Processing | Enables the use of PCs with any number of cores. The number of parallel processes is limited to 2 threads. |
| FIS-RUN-TL-4 | FabImage® Studio Runtime limited to 4 Threads | ADD-ON Runtime License for Parallel Processing | Enables the use of PCs with any number of cores. The number of parallel processes is limited to 4 threads. |
| FIS-RUN-TL-6 | FabImage® Studio Runtime limited to 6 Threads | ADD-ON Runtime License for Parallel Processing | Enables the use of PCs with any number of cores. The number of parallel processes is limited to 6 threads. |
| FIS-RUN-TL-8 | FabImage® Studio Runtime limited to 8 Threads | ADD-ON Runtime License for Parallel Processing | Enables the use of PCs with any number of cores. The number of parallel processes is limited to 8 threads. |
| FIS-RUN-TL-16 | FabImage® Studio Runtime limited to 16 Threads | ADD-ON Runtime License for Parallel Processing | Enables the use of PCs with any number of cores. The number of parallel processes is limited to 16 threads. |
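The choice between the two Multithreading Runtime families can be summarised with a small lookup sketch. The part numbers come from the table above. The selection rules (an exact core-count match for FIS-RUN-CL-XX, and the smallest sufficient limit for FIS-RUN-TL-XX) are an interpretation of the table, not an official ordering procedure, and the function names are hypothetical.

```python
# Part numbers taken from the Multithreading Runtime table.
CORE_LICENSES = {4: "FIS-RUN-CL-4", 6: "FIS-RUN-CL-6",
                 8: "FIS-RUN-CL-8", 16: "FIS-RUN-CL-16"}
THREAD_LICENSES = {2: "FIS-RUN-TL-2", 4: "FIS-RUN-TL-4", 6: "FIS-RUN-TL-6",
                   8: "FIS-RUN-TL-8", 16: "FIS-RUN-TL-16"}

def core_license(pc_cores):
    """Core-based P/N for a PC with exactly `pc_cores` cores, or None if none is listed."""
    return CORE_LICENSES.get(pc_cores)

def thread_license(parallel_processes):
    """Smallest thread-limited P/N covering the requested parallelism, or None.

    Assumption: picking the smallest sufficient limit is the sensible choice.
    """
    for limit in sorted(THREAD_LICENSES):
        if parallel_processes <= limit:
            return THREAD_LICENSES[limit]
    return None

print(core_license(8))     # FIS-RUN-CL-8
print(thread_license(3))   # FIS-RUN-TL-4
```

A core-based license suits a dedicated vision PC whose core count is listed. A thread-limited license suits a PC with any other core count, as long as the number of parallel processes stays within the limit.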

ADD-ON Runtime License DEEP LEARNING

In order to run the Deep Learning ADD-ON features, the following Runtime License must be purchased in addition to the FabImage® Studio Runtime (FIS-RUN).

To purchase the Deep Learning Runtime ADD-ON, you must have purchased a FabImage® Studio Developer License (FIS-PRO) and a Developer Deep Learning ADD-ON License (FI-DL-ADD).

\* Multiple GPU cards cannot be used for inference.

| P/N | Description | Category | Features |
| --- | --- | --- | --- |
| FIS-RUN-DL | FabImage® Studio Deep Learning Runtime ADD-ON | Deep Learning ADD-ON Runtime License | Enables the user to execute single-threaded Deep Learning* |

Service License

Once 12 months have passed since activation of the FabImage® Studio License (FIS-PRO) or of the Developer ADD-ON Licenses (FIS-CODE-ADD, FIS-PAR-ADD and FI-DL-ADD), the following Service Licenses are available to extend technical support services for an additional 12 months. These services include:

- Most up-to-date version of the software with associated new features and documentation

- Answers, via email, to technical questions related to the use of the software

\* Parallel Add-on licenses get free updates once you purchase a FIS-EXT.

\*\* If you purchase ADD-EXT, you don’t need to purchase FIS-EXT as well.

\*\*\* In order to purchase the DL-EXT, the FIS-EXT must also be purchased.

| P/N | Description | Category | Features |
| --- | --- | --- | --- |
| FIS-EXT | FabImage® Studio EXTENSION | Service License | License required for: |
| ADD-EXT | FabImage® Studio + Code Generator ADD-ON EXTENSION | Service License | License required for: |
| DL-EXT* | FabImage® Deep Learning ADD-ON EXTENSION | Service License | License required for: |

If you don’t extend the technical support services, you can still:

- Use your Developer License (FIS-PRO) and Add-on Licenses (FIS-CODE-ADD, FIS-PAR-ADD, and FI-DL-ADD), but only at the latest version available when the technical support expired

- Purchase and use Runtime Licenses (including Multithreading and Deep Learning ones), but only at the latest version available when the technical support expired

IMPORTANT

Opto Engineering releases 2 to 4 new versions of Developer Licenses annually, so it is very likely that a new version will already be available when support expires. For this reason, and so that you can continue to benefit from our technical support, Opto Engineering always recommends purchasing Service Licenses.

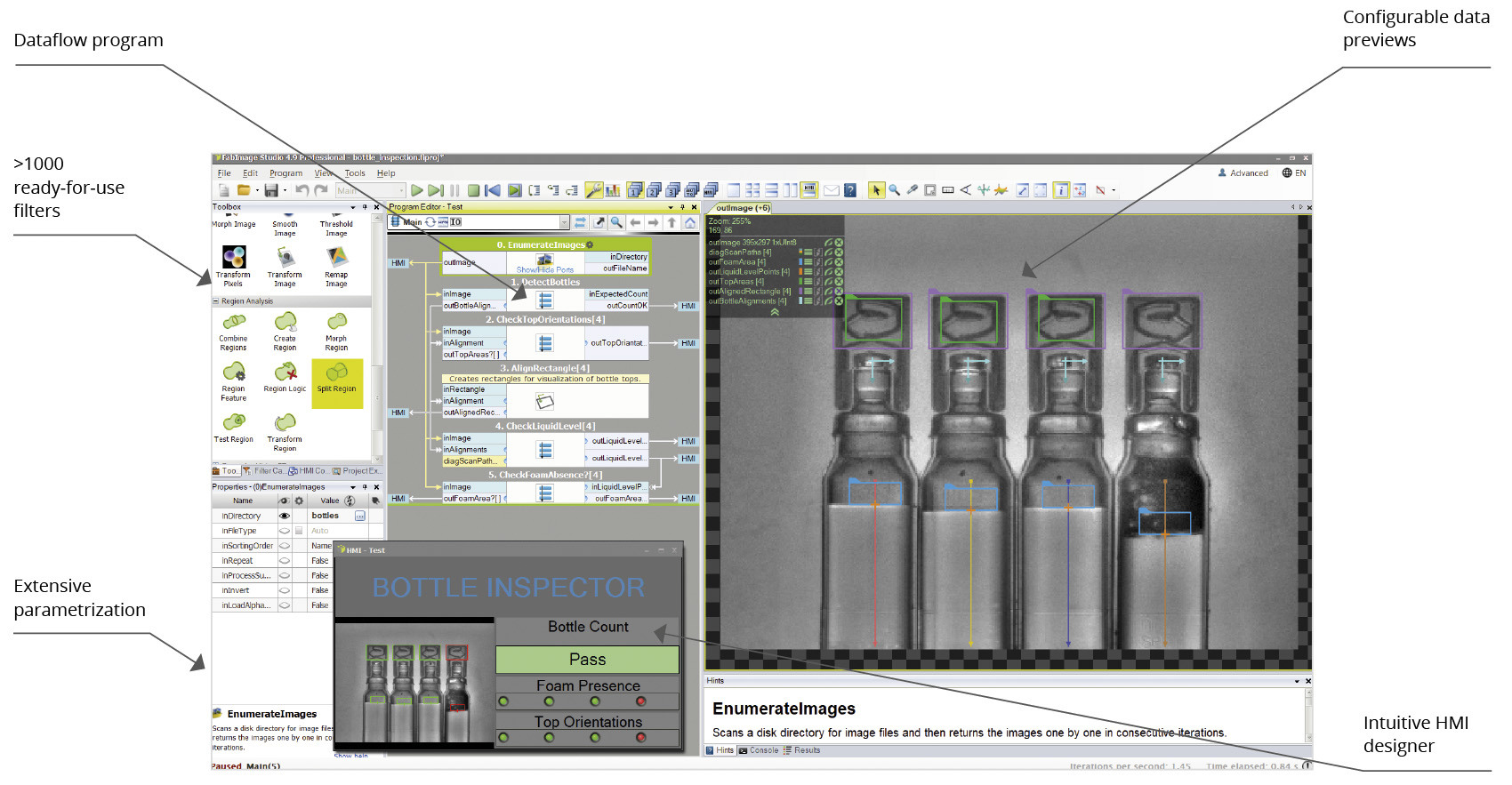

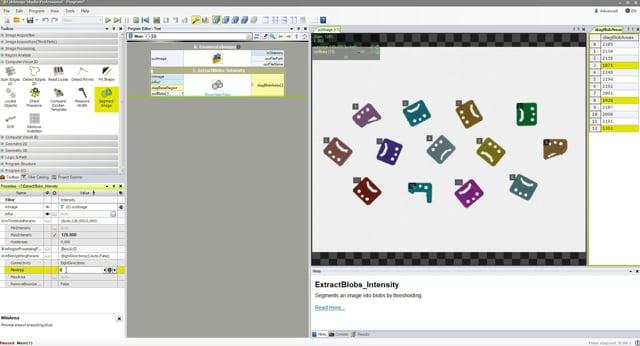

Intuitive

Drag & Drop

All programming is done by choosing filters and connecting them with each other. You can focus all your attention on computer vision.

You Can See Everything

Inspection results are visualized on multiple configurable data previews, and when a parameter in the program is changed, the previews update in real time.

HMI Designer

You can easily create custom graphical user interfaces and thus build the entire machine vision application using a single software package.

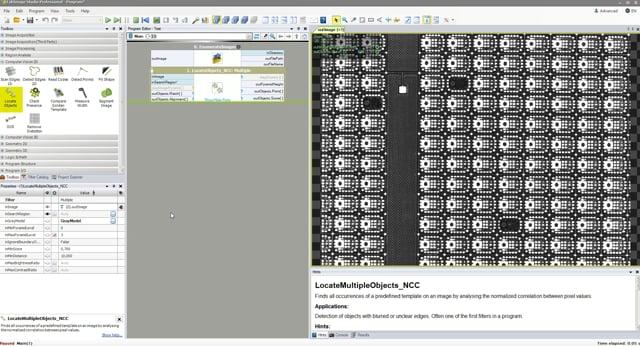

Powerful

Over 1000 Ready-for-Use Filters

There are over 1000 ready-for-use machine vision filters, tested and optimized on hundreds of applications. They have many advanced capabilities such as outlier suppression, subpixel precision and any-shape region-of-interest.

Hardware Acceleration

The filters are aggressively optimized for the SSE technology and for multicore processors. Our implementations are among the fastest in the world!

Loops and Conditions

Without writing a single line of code, you can create custom and scalable program flows. Loops, conditions and subprograms (macrofilters) are built graphically from the appropriate data-flow constructs.

Adaptable

GigE Vision and GenTL Support

FabImage® Studio is a GigE Vision compliant product, supporting the GenTL interface as well as a number of vendor-specific APIs. Thus, you can use it with Opto Engineering® cameras and most cameras available on the market, including models from Matrix Vision, Allied Vision, Basler, Baumer, Dalsa, PointGrey, Photon Focus, XIMEA and more.

User Filters

You can use user filters to integrate your own C/C++ code with the benefits of visual programming.

C++ Code Generator

Programs created in FabImage® Studio can be exported to C++ code or to .NET assemblies. This makes it very easy to integrate your vision algorithms with applications created in C++, C# or VB programming languages.

Application cases

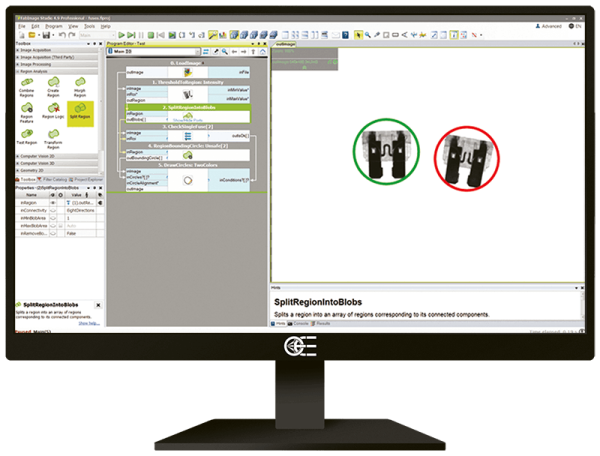

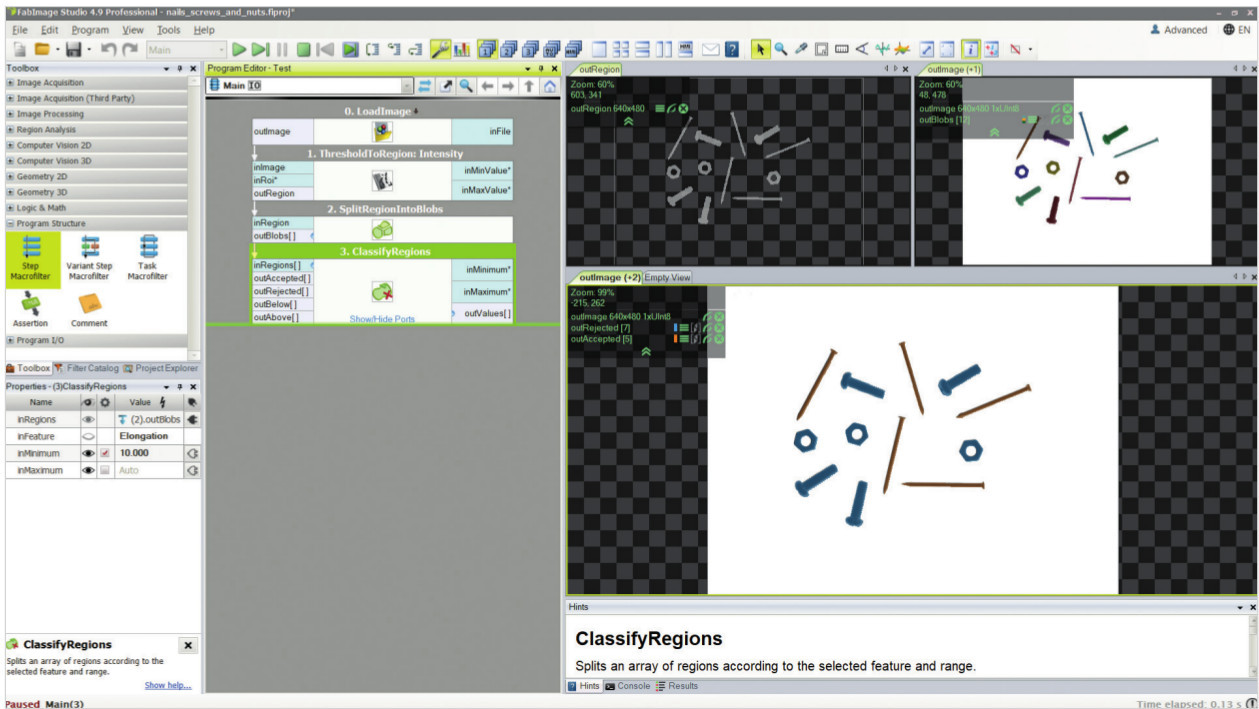

In this application, we need to sort nails amongst nuts and bolts. The image is thresholded and the resulting regions are split into blobs; finally, the blobs are classified by their elongation and the nails are easily found.
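The three steps described above (threshold, split into blobs, classify by elongation) can be sketched in plain Python. This is not FabImage® code: the function names are hypothetical, and elongation is approximated here by the aspect ratio of each blob's axis-aligned bounding box, which may differ from the measure the actual filter uses.

```python
from collections import deque

def threshold(image, level):
    """Binarize a grayscale image: 1 where the pixel is at least `level`, else 0."""
    return [[1 if p >= level else 0 for p in row] for row in image]

def split_into_blobs(mask):
    """Group foreground pixels into 4-connected blobs; each blob is a list of (row, col)."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for r in range(height):
        for c in range(width):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, blob = deque([(r, c)]), []
                while queue:
                    y, x = queue.popleft()
                    blob.append((y, x))
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < height and 0 <= nx < width
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                blobs.append(blob)
    return blobs

def elongation(blob):
    """Assumed measure: long side divided by short side of the bounding box."""
    rows = [y for y, _ in blob]
    cols = [x for _, x in blob]
    h = max(rows) - min(rows) + 1
    w = max(cols) - min(cols) + 1
    return max(h, w) / min(h, w)

# A thin horizontal "nail" and a compact "nut" on a dark background.
image = [
    [0,   0,   0,   0,   0,   0,   0, 0],
    [0, 200, 200, 200, 200, 200, 200, 0],
    [0,   0,   0,   0,   0,   0,   0, 0],
    [0, 180, 180,   0,   0,   0,   0, 0],
    [0, 180, 180,   0,   0,   0,   0, 0],
]
blobs = split_into_blobs(threshold(image, 128))
nails = [b for b in blobs if elongation(b) >= 3.0]
```

The bounding-box ratio is only reliable for objects aligned with the image axes. A nail lying diagonally would need a rotation-invariant measure, for example one based on the blob's principal axes.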

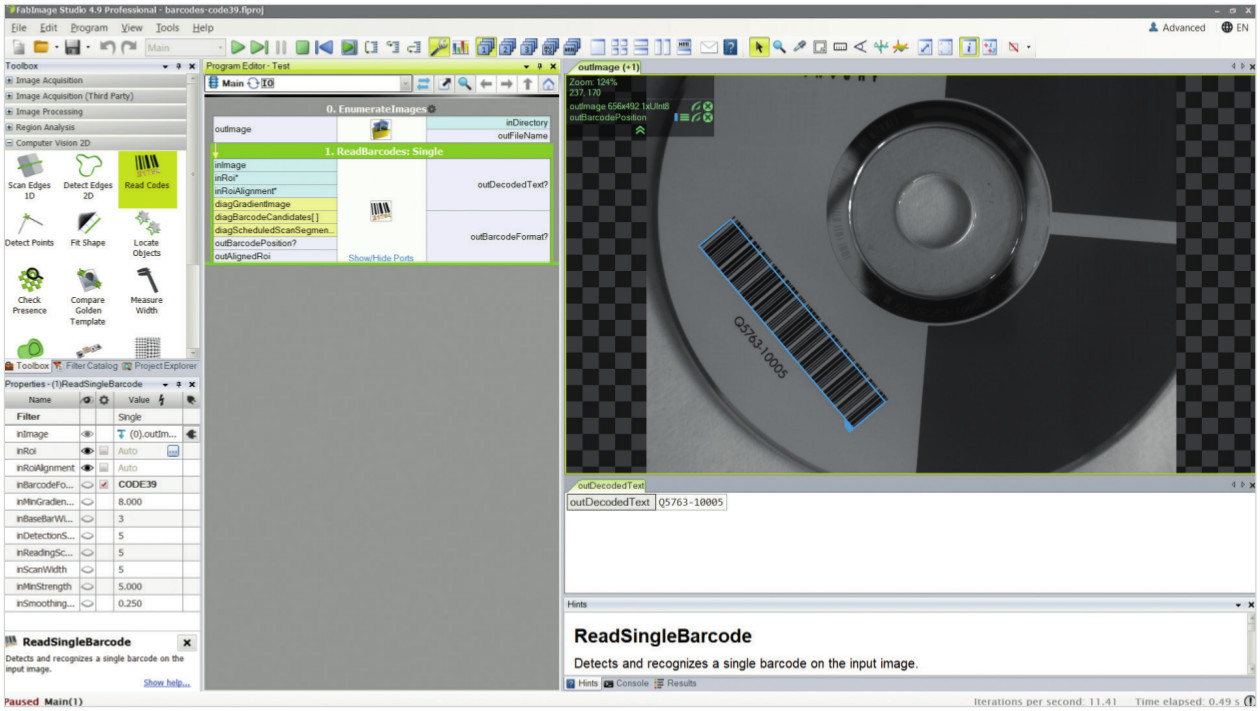

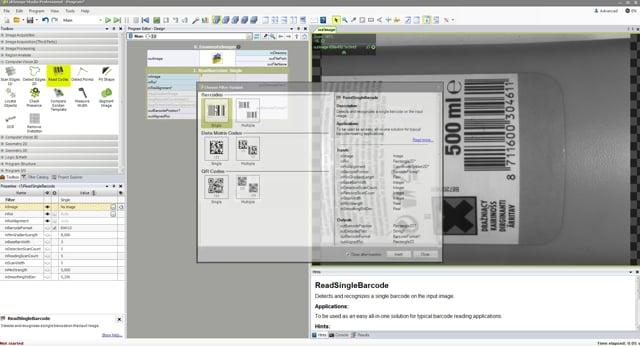

This example shows a basic ReadBarcodes filter. The tool automatically finds the barcode and outputs the decoded text.